- Published on

Large Language Models - The Basics

- Authors

- Name

- Simon Håkansson

- @0fflinedocs

Large Language Models - The Basics

Large language models (LLMs) generate responses based on statistical probability, with varying degrees of confidence depending on the patterns in their training data. They excel at tasks like brainstorming, ideation, and writing assistance, offering multiple perspectives and creative solutions.

However, Large language models have limitations, such as relying on pattern recognition rather than verified knowledge, which can lead to confidently presented but incorrect information. They also require clear guidance and context to understand real-world nuances.

NOTE

Domain knowledge remains essential when working with LLMs, especially in technical tasks since the results should be verified!

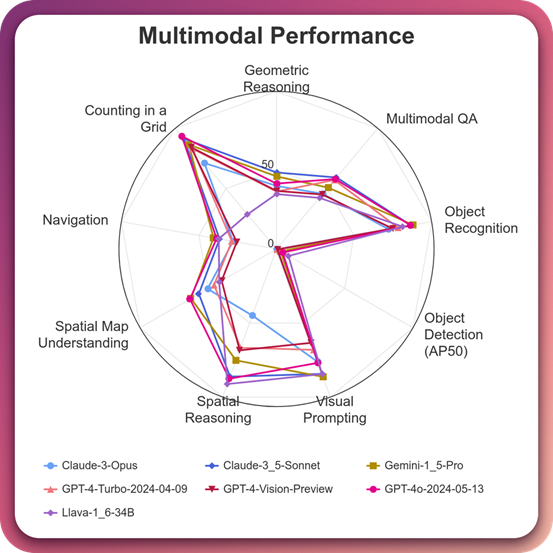

Performance

Different Large Language Models perform differently on certain types of tasks. Sometimes new versions of models also makes them better at one specific domain, but might be causing a setback in another domain. So it's important to find the right model for the right task.

Tokenization

Source from OpenAI.

Source from OpenAI.Context windows define the maximum number of tokens an LLM can process at once - for example, a 16K context window means the model can handle approximately 16,000 tokens simultaneously. Larger context windows allow LLMs to "remember" more information from earlier in a conversation or document, enabling more coherent responses across longer interactions.

Hallucinations

Large language model hallucination is an occurrence that can happen, especially when the training data is large and include many variations (such as the public internet). When hallucinations happen, the language models generate incorrect information with high confidence despite it not being supported by their training data. It stems from the models' tendency to prioritize fluent, plausible-sounding responses over factual accuracy when faced with uncertainty.

Understanding Probability in LLMs

Large language models operate on statistical probability to generate responses. This probability-based approach has varying degrees of confidence depending on the patterns in the training data.

High Confidence Patterns When asked a question like "What's the capital of Sweden?", an LLM is very likely to respond with "Stockholm." This confidence stems from this fact appearing thousands of times consistently in its training data, creating a strong pattern.

Low Confidence Patterns However, for questions like "What's the best business strategy?", an LLM will generate multiple possible answers based on similar patterns it has seen. This occurs because there are many valid approaches with different probability scores, leading to less certainty in the response.

Capabilities & Limitations

Strengths

Large language models excel at several tasks that can significantly enhance your productivity:

Brainstorming & Ideation

- Large language models excel at generating diverse perspectives on a topic, helping you discover creative solutions beyond your initial thinking.

- When you are stuck on a problem or need new ideas, these models can provide multiple angles and approaches to get your creativity flowing again.

- They serve as valuable problem-solving tools by offering alternative viewpoints that might not have occurred to you naturally.

Writing Assistance

- Assist on structuring, draftin documents and streamlining communication tasks.

- Large language models can generate well-commented code when properly instructed, enhancing code readability and understanding.

- When given clear guidance, they adapt their responses to meet specific requirements, offering customized outputs based on your needs.

Weaknesses

Despite their impressive capabilities, large lange models have important limitations to keep in mind:

Pattern Recognition vs. Verified Knowledge

- Works on recognizing patterns rather than accessing verified facts.

- Can present information confidently thats incorrect

- Use large language modles to guide you rather than replace verification, especially in technical areas.

Context Understanding

- Real-world nuance might be missed

- Require clear guidance and context for qualititative results

Conclusion

Large Language Models operate probalistically and makes "best guesses" based on the data their trained on. It's critical to assess and verify the information that are returned from the prompts. Domain knowledge also helps (for example, knowing what permissions actually are least privilege, what's a recommended way of authenticating securely etc). Use the strengths of the models and understand their weaknesses. Be sure to validate your code before you deploy it in production, or valide your information before share it!

What can we then do to increase the chances of success when we engage with large language models? Adding context! I'll cover this in my next blogpost: Beyond Basic Prompts: Leveraging Context and RAG.